Working on a remote HPC system

Overview

Teaching: 25 min

Exercises: 10 min

Questions

What is an HPC system?

How does an HPC system work?

How do I log on to a remote HPC system?

Objectives

Connect to a remote HPC system.

Understand the general HPC system architecture.

What is an HPC system?

The words “cloud”, “cluster”, and the phrase “high-performance computing” or “HPC” are used a lot in different contexts and with various related meanings. So what do they mean? And more importantly, how do we use them in our work?

The cloud is a generic term commonly used to refer to computing resources that are a) provisioned to users on demand or as needed and b) represent real or virtual resources that may be located anywhere on Earth. For example, a large company with computing resources in Brazil, Zimbabwe and Japan may manage those resources as its own internal cloud and that same company may also utilize commercial cloud resources provided by Amazon or Google. Cloud resources may refer to machines performing relatively simple tasks such as serving websites, providing shared storage, providing webservices (such as e-mail or social media platforms), as well as more traditional compute intensive tasks such as running a simulation.

The term HPC system, on the other hand, describes a stand-alone resource for computationally intensive workloads. Such systems typically comprise a multitude of integrated processing and storage elements, designed to handle high volumes of data and/or large numbers of floating-point operations per second (FLOPS) with the highest possible performance. For example, all of the machines on the Top-500 list are HPC systems. To meet these demands, an HPC resource must exist in a specific, fixed location: networking cables can only stretch so far, and electrical and optical signals can travel only so fast.

The word “cluster” is often used for small to moderate scale HPC resources less impressive than the Top-500. Clusters are often maintained in computing centers that support several such systems, all sharing common networking and storage to support common compute intensive tasks.

Logging in

The first step in using a cluster is to establish a connection from your laptop to the cluster, via the Internet and/or your organisation’s network. We use a program called the Secure SHell (or ssh) client for this. Make sure you have an SSH client installed on your laptop. Refer to the setup section for more details.

Go ahead and log in to the Plato HPC cluster at the University of Saskatchewan.

[user@laptop ~]$ ssh nsid@plato.usask.ca

Remember to replace nsid by your own NSID. You will be asked for your password; this is the same one you use with other University of Saskatchewan resources, such as the PAWS website. Watch out: the characters you type after the password prompt are not displayed on the screen. Normal output will resume once you press Enter.

You are logging in using a program known as the secure shell or ssh. This establishes a temporary encrypted connection between your laptop and plato.usask.ca. The word before the @ symbol, e.g. nsid here, is the user account name that you have permission to use on the cluster.
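To make the structure of the login string concrete, here is a minimal sketch that splits an address of the form user@host into its two parts using shell parameter expansion. The function names login_user and login_host are hypothetical helpers written for this illustration; they are not part of ssh, and nsid remains a placeholder for your own account name.

```shell
#!/usr/bin/env bash
# Split a login string of the form user@host into its two parts.
# login_user and login_host are illustrative names, not ssh features.

login_user() {
    # Drop everything from the first "@" to the end of the string.
    printf '%s\n' "${1%%@*}"
}

login_host() {
    # Drop everything from the start of the string up to the first "@".
    printf '%s\n' "${1#*@}"
}

target="nsid@plato.usask.ca"
echo "account: $(login_user "$target")"
echo "machine: $(login_host "$target")"
```

The first part is the account you are allowed to use; the second part is the machine that ssh contacts.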

Where are we?

Many users are tempted to think of a high-performance computing installation as one giant, magical machine. Sometimes, people will assume that the computer they’ve logged onto is the entire computing cluster. So what’s really happening? What computer have we logged on to? The name of the computer we are currently logged onto can be checked with the hostname command. (You may also notice that the current hostname is part of our prompt!)

[nsid@platolgn01 ~]$ hostname

platolgn01
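If you want a script to behave differently depending on where it runs, one possible approach is to test the hostname against a pattern. The sketch below assumes that login nodes are named platolgn followed by a number, as the prompt above suggests; that naming rule is an assumption, and node_kind is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Guess what kind of node a hostname belongs to.
# ASSUMPTION: login nodes are named platolgn<NN>, as seen in the prompt.
# node_kind is an illustrative name, not a system command.

node_kind() {
    case "$1" in
        platolgn*) echo "login" ;;
        *)         echo "other" ;;
    esac
}

# Fixed example names, so the sketch runs anywhere.
echo "platolgn01 is a $(node_kind platolgn01) node"
echo "plato418 is a $(node_kind plato418) node"
```

On the cluster itself, node_kind "$(hostname)" would classify the machine you are currently logged in to.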

What’s in your home directory?

The system administrators may have configured your home directory with some helpful files, folders, and links (shortcuts) to space reserved for you on other filesystems. Take a look around and see what you can find.

Home directory contents vary from user to user. Please discuss any differences you spot with your neighbors.

Hint: The shell commands pwd and ls may come in handy.

Solution

Use pwd to print the working directory path:

[nsid@platolgn01 ~]$ pwd

The deepest layer should differ: nsid is uniquely yours. Are there differences in the path at higher levels?

You can run ls to list the directory contents, though it’s possible nothing will show up (if no files have been provided). To be sure, use the -a flag to show hidden files, too.

[nsid@platolgn01 ~]$ ls -a

At a minimum, this will show the current directory as “.” and the parent directory as “..”.

If both of you have empty directories, they will look identical. If you or your neighbor has used the system before, there may be differences. What are you working on?

Nodes

Individual computers that compose a cluster are typically called nodes (although you will also hear people call them servers, computers and machines). On a cluster, there are different types of nodes for different types of tasks. The node where you are right now is called the login node or submit node. A login node serves as an access point to the cluster. As a gateway, it is well suited for uploading and downloading files, setting up software, and running quick tests. It should never be used for doing computationally intensive work.

The real work on a cluster gets done by the worker (or compute) nodes. Worker nodes come in many shapes and sizes, but generally are dedicated to long or hard tasks that require a lot of computational resources.

All interaction with the worker nodes is handled by a specialized piece of software called a scheduler (the scheduler used in this lesson is called Slurm). We’ll learn more about how to use the scheduler to submit jobs next, but for now, it can also give us more information about the worker nodes.

For example, we can view all of the worker nodes with the sinfo command.

[nsid@platolgn01 ~]$ sinfo

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

plato_long up 21-00:00:0 25 mix plato[417-421,423,425-426,428-435,437-441,445-448]

plato_long up 21-00:00:0 62 idle plato[225-234,237-238,240-247,309-330,333-337,339-343,346-348,422,424,427,436,442-444]

plato_medium up 4-00:00:00 25 mix plato[417-421,423,425-426,428-435,437-441,445-448]

plato_medium up 4-00:00:00 62 idle plato[225-234,237-238,240-247,309-330,333-337,339-343,346-348,422,424,427,436,442-444]

plato_short up 12:00:00 25 mix plato[417-421,423,425-426,428-435,437-441,445-448]

plato_short up 12:00:00 62 idle plato[225-234,237-238,240-247,309-330,333-337,339-343,346-348,422,424,427,436,442-444]

plato_gpu up 7-00:00:00 4 idle plato-base-gpu-[23003-23004],platogpu[103-104]

plato_gpu_short up 12:00:00 4 idle plato-base-gpu-[23003-23004],platogpu[103-104]

[...]
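The listing above can be long, so it may help to summarize it. The following sketch sums the NODES column by STATE for a single partition. The helper name count_nodes is hypothetical, and the sample rows are copied from the listing above with the node lists shortened for readability.

```shell
#!/usr/bin/env bash
# Sum the NODES column of sinfo-style output by STATE for one partition.
# count_nodes is an illustrative helper name, not a Slurm command.

count_nodes() {
    awk -v p="$1" '
        NR == 1 { next }                      # skip the header row
        { name = $1; sub(/\*$/, "", name) }   # sinfo marks the default partition with "*"
        name == p { total[$5] += $4 }         # column 4 is NODES, column 5 is STATE
        END { for (s in total) print s, total[s] }
    ' | sort
}

# Sample rows taken from the listing above (node lists shortened).
count_nodes plato_short <<'EOF'
PARTITION AVAIL TIMELIMIT NODES STATE NODELIST
plato_long up 21-00:00:0 25 mix plato[417-421]
plato_short up 12:00:00 25 mix plato[417-421]
plato_short up 12:00:00 62 idle plato[225-234]
EOF
```

On the cluster, the same helper could read live data with sinfo | count_nodes plato_short. Note that the same physical nodes appear in several partitions, so totals should not be added across partitions.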

There are also specialized machines used for managing disk storage, user authentication, and other infrastructure-related tasks. Although we do not typically log on to or interact with these machines directly, they enable a number of key features like ensuring our user account and files are available throughout the HPC system.

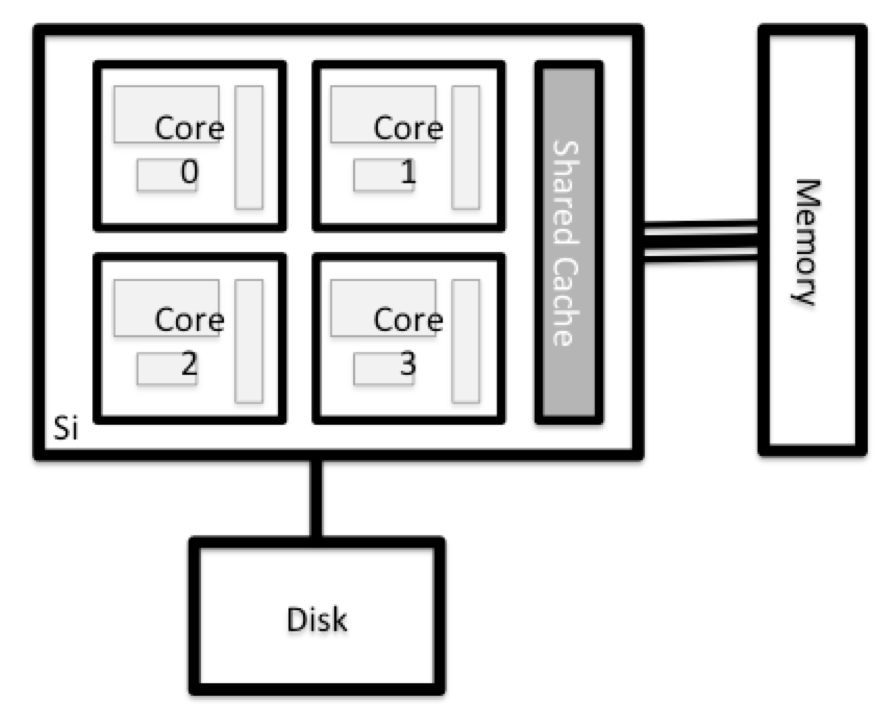

What’s in a node?

All of the nodes in an HPC system have the same components as your own laptop or desktop: CPUs (sometimes also called processors or cores), memory (or RAM), and disk space. CPUs are a computer’s tool for actually running programs and calculations. Information about a current task is stored in the computer’s memory. Disk refers to all storage that can be accessed like a file system. This is generally storage that can hold data permanently, i.e. data is still there even if the computer has been restarted. While this storage can be local (a hard drive installed inside the node), it is more common for nodes to connect to a shared, remote fileserver or cluster of servers.

Explore Your Computer

Try to find out the number of CPUs and amount of memory available on your personal computer.

Note that, if you’re logged in to the remote computer cluster, you need to log out first. To do so, type Ctrl+d or exit:

[nsid@platolgn01 ~]$ exit

[user@laptop ~]$

Solution

There are several ways to do this. Most operating systems have a graphical system monitor, like the Windows Task Manager. More detailed information can be found on the command line:

- Run system utilities

[user@laptop ~]$ nproc --all

[user@laptop ~]$ free -m

- Read from /proc

[user@laptop ~]$ cat /proc/cpuinfo

[user@laptop ~]$ cat /proc/meminfo

- Run system monitor

[user@laptop ~]$ htop
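The file /proc/meminfo contains many lines, and only one of them reports the total memory. As a rough sketch, the helper below (mem_total_mib, a hypothetical name) picks out the MemTotal line and converts its value from kB to MiB. The sample input is invented for illustration and does not describe any real machine.

```shell
#!/usr/bin/env bash
# Read /proc/meminfo-style text on standard input and print MemTotal in MiB.
# mem_total_mib is an illustrative helper name, not a system utility.

mem_total_mib() {
    # The value on the MemTotal line is given in kB; divide by 1024 and round down.
    awk '$1 == "MemTotal:" { print int($2 / 1024) }'
}

# Invented sample input in the same layout as /proc/meminfo.
printf 'MemTotal:       16777216 kB\nMemFree:         1048576 kB\n' | mem_total_mib
```

On a Linux machine, mem_total_mib < /proc/meminfo should print a figure close to the total reported by free -m.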

Explore The Head Node

Now compare the resources of your computer with those of the head node.

Solution

[user@laptop ~]$ ssh nsid@plato.usask.ca

[nsid@platolgn01 ~]$ nproc --all

[nsid@platolgn01 ~]$ free -m

You can get more information about the processors using lscpu, and a lot of detail about the memory by reading the file /proc/meminfo:

[nsid@platolgn01 ~]$ less /proc/meminfo

You can also explore the available filesystems using df to show disk free space. The -h flag renders the sizes in a human-friendly format, i.e., GB instead of B. The type flag -T shows what kind of filesystem each resource is.

[nsid@platolgn01 ~]$ df -Th

The local filesystems (ext, tmp, xfs, zfs) will differ depending on which login node (or, later on, compute node) you are on. Networked filesystems (beegfs, cifs, gpfs, nfs, pvfs) will be similar, but may include your user name, depending on how they are mounted.
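The grouping into local and networked filesystem types described above can be written down as a small lookup. This is only a sketch based on the type names listed in this lesson: fs_kind is a hypothetical helper, the patterns are not exhaustive, and any type not listed is reported as unknown.

```shell
#!/usr/bin/env bash
# Classify a filesystem type name as local or networked,
# using only the type names mentioned in this lesson.
# fs_kind is an illustrative helper name, not a system utility.

fs_kind() {
    case "$1" in
        ext*|tmp*|xfs|zfs)           echo "local" ;;
        beegfs|cifs|gpfs|nfs*|pvfs*) echo "networked" ;;
        *)                           echo "unknown" ;;
    esac
}

# A few example type names as they might appear in the Type column of df -T.
for t in ext4 tmpfs nfs4 beegfs overlay; do
    echo "$t: $(fs_kind "$t")"
done
```

Types reported as networked are the ones you can expect to see from other nodes as well.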

Shared file systems

This is an important point to remember: files saved on one node (computer) are often available everywhere on the cluster!

Explore a Worker Node

Finally, let’s look at the resources available on the worker nodes where your jobs will actually run. Try running this command to see the name, CPUs and memory available on the worker nodes:

[nsid@platolgn01 ~]$ sinfo -n plato418 -o "%n %c %m"
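In the format string above, %n is the node hostname, %c is the number of CPUs per node, and %m is the memory per node in megabytes. The sketch below turns that three-column output into a sentence and converts the memory to GiB. The helper name describe_node is hypothetical, and the sample values are invented; they are not the real figures for any Plato node.

```shell
#!/usr/bin/env bash
# Turn the output of: sinfo -o "%n %c %m"
# into a readable line per node, with memory converted from MB to GiB.
# describe_node is an illustrative helper name, not a Slurm command.

describe_node() {
    # Skip the header row, then report each node (memory rounded down).
    awk 'NR > 1 { printf "%s: %d CPUs, %d GiB of memory\n", $1, $2, $3 / 1024 }' | sort -u
}

# Invented sample input in the same three-column layout.
describe_node <<'EOF'
HOSTNAMES CPUS MEMORY
examplenode 16 65536
EOF
```

On the cluster, the command above could be piped straight into the helper: sinfo -n plato418 -o "%n %c %m" | describe_node.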

Compare Your Computer, the Head Node and the Worker Node

Compare your laptop’s number of processors and memory with the numbers you see on the cluster head node and worker node. Discuss the differences with your neighbor.

What implications do you think the differences might have on running your research work on the different systems and nodes?

Differences Between Nodes

Many HPC clusters have a variety of nodes optimized for particular workloads. Some nodes may have larger amounts of memory, or specialized resources such as Graphics Processing Units (GPUs).

With all of this in mind, we will now cover how to talk to the cluster’s scheduler, and use it to start running our scripts and programs!

Key Points

An HPC system is a set of networked machines.

HPC systems typically provide login nodes and a set of worker nodes.

The resources found on independent (worker) nodes can vary in volume and type (amount of RAM, processor architecture, availability of network mounted file systems, etc.).

Files saved on one node are usually available on all nodes, because most storage is shared.